RL Isn't Enough:

There has been a plethora of tricks and heuristics that have boosted LLM performance. In particular, Reinforcement Learning (RL) has surged in popularity, thanks in part to DeepSeek-R1 and its tuning via GRPO. However, I'm betting that RL alone won't suffice to achieve AGI. At its core, RL assumes a world model. It works in domains like Go or chess, where the rules are fixed, the state space is combinatorially constrained, and the niceties an RL environment needs (well-defined states, actions, and rewards) are guaranteed.

Dynamic programming (à la Dimitri P. Bertsekas [3]) certainly influenced modern RL. But when "the world is fuzzy", that is, when the ground rules aren't set in stone and constraints aren't guaranteed or cleanly composable, approximate dynamic programming is known to struggle, and RL struggles similarly. [5] This is why, even today, we typically pretrain an LLM and then impose an RL-based policy on top, rather than doing RL language modeling from scratch. Yet I suspect that pipeline alone will still fall short of AGI.
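To make the dependence on a known model concrete, here is a minimal sketch of value iteration (classic dynamic programming) on a hypothetical 4-state chain. The MDP, its rewards, and all names below are illustrative assumptions, not something taken from the cited references. The point to notice is that the Bellman backup reads the transition tensor `P` and reward table `R` directly, so it only works when those are fixed and known.

```python
import numpy as np

# Hypothetical toy MDP: states 0..3 on a line, state 3 is absorbing.
# Actions: 0 = left, 1 = right. Transitions are deterministic and known.
n_states, n_actions, gamma = 4, 2, 0.9
P = np.zeros((n_states, n_actions, n_states))  # P[s, a, s'] = transition prob.
R = np.zeros((n_states, n_actions))            # R[s, a] = immediate reward

for s in range(3):
    P[s, 0, max(s - 1, 0)] = 1.0  # moving left (bounces at state 0)
    P[s, 1, s + 1] = 1.0          # moving right
P[3, :, 3] = 1.0                  # absorbing goal state
R[2, 1] = 1.0                     # reward for stepping into the goal


def value_iteration(P, R, gamma, tol=1e-10):
    """Repeat the Bellman optimality backup until the values stop changing."""
    V = np.zeros(P.shape[0])
    while True:
        Q = R + gamma * (P @ V)   # (S, A, S) @ (S,) -> (S, A)
        V_new = Q.max(axis=1)
        if np.max(np.abs(V_new - V)) < tol:
            return V_new, Q.argmax(axis=1)
        V = V_new


V, policy = value_iteration(P, R, gamma)
print(V)       # optimal state values
print(policy)  # greedy action per state
```

If `P` or `R` drifted between backups, the fixed point this loop converges to would no longer describe the environment, which is the "fuzzy world" failure mode described above.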

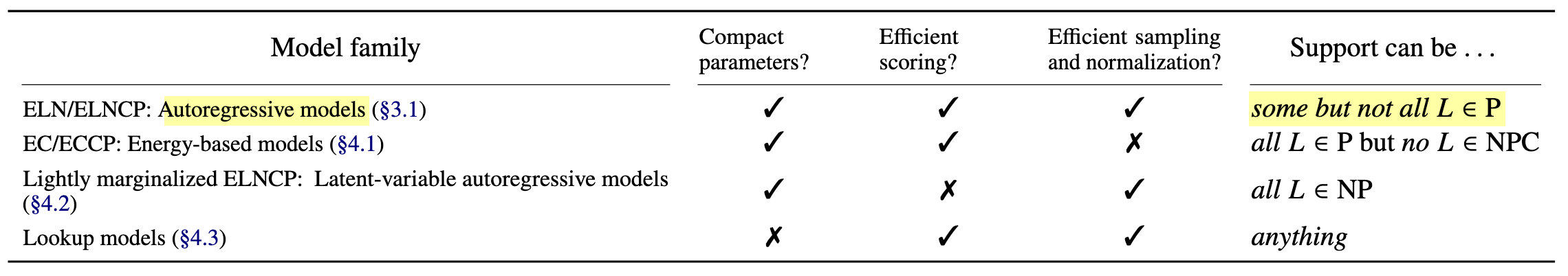

Lin et al. (2021) show that any autoregressive model whose parameter count and compute scale only polynomially with sequence length cannot express all languages in the class P (the class of decision problems solvable in polynomial time; NP is its non-deterministic counterpart). [1] In fact, standard autoregressive models, despite their impressive empirical successes, can only capture some languages in P: there exist decision problems in P that no such autoregressive model can represent without super-polynomial growth in its parameters. [1]

As a consequence, we can't rely on polynomial-sized, purely autoregressive LLMs to capture every efficiently decidable language, nor to serve as oracle solvers for all polynomial-time problems. Bootstrapping RL onto an autoregressive model can help somewhat, but you're still limited by the LLM backbone. To break through toward AGI, we'll need architectures that either sacrifice some efficiency (such as energy-based or semiparametric "lookup" models) or introduce latent structure in such a way that light marginalization can escape these expressivity limits. Continued progress in hybridizing LLMs with external memories, solvers, or dynamic latent variables will likely be an essential step on the path beyond what RL + LLM alone can deliver.

Some Hope (Also my bias):

There is a hybridization between energy-based models and lightly marginalized latent-variable models that I suspect can help. I had the fortune of working with Deqian Kong on the Latent Plan Transformer (2024), which learns to compress high-dimensional trajectory data into compact latent plans, enabling efficient long-horizon planning via latent-space inference. [4] This then evolved into the Latent Thought Model (2025), which adds dynamic latent reasoning steps, allowing the model to refine and adapt its latent decisions and yielding stronger performance on complex sequential tasks. [5]

Basically, we can think of an LLM as learning p(y|x), i.e. the probability of the next token y given the previous n-1 tokens x. The trick is that we may express this by marginalizing over a latent variable z:

$$ p(y|x) = \int p(z | x)\, p(y | z, x)\, dz $$

The intuition is as follows. For any sequence x, you can have a rough plan or idea z. However, x may be out of distribution (in real life this will always be the case, since future data keeps evolving). Then you need to perform some posterior optimization to adjust your plan/thought given the current environment (notice that this helps when the environment is fuzzy). The other step is to condition on both x and your formulated plan/thought z to predict y. So the lesson here is that as long as you're willing to do some posterior optimization on a low-dimensional latent z, you can better handle the case where x is out of distribution. As far as I'm aware, RL can't handle this situation. RL isn't enough.
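The two steps above (marginalizing over z, and adjusting z by posterior optimization) can be sketched with a toy model. This is only an illustration under loud assumptions: the Gaussian prior p(z|x), the softmax likelihood p(y|z,x), the random parameters `W_prior`, `A`, `B`, and the choice to use a single observed token as the evidence are all hypothetical, and none of it is the Latent Plan Transformer or Latent Thought Model implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
V, D, F = 5, 2, 3  # vocab size, latent dim, context-feature dim (toy choices)

# Hypothetical random parameters; a real model would learn these.
W_prior = rng.normal(size=(D, F))  # context features -> prior mean of z
A = rng.normal(size=(V, D))        # how the latent z shifts next-token logits
B = rng.normal(size=(V, F))        # how the context x shifts next-token logits


def softmax(logits):
    shifted = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=-1, keepdims=True)


def prior_mean(x):
    """Mean of the assumed prior p(z | x) = N(W_prior x, I)."""
    return W_prior @ x


def likelihood(z, x):
    """p(y | z, x) as a probability vector over V tokens (z may be batched)."""
    return softmax(z @ A.T + B @ x)


def marginal(x, num_samples=10_000):
    """Monte Carlo estimate of p(y | x) = integral of p(z | x) p(y | z, x) dz."""
    z = prior_mean(x) + rng.normal(size=(num_samples, D))
    return likelihood(z, x).mean(axis=0)


def log_joint(z, x, y):
    """log p(z | x) + log p(y | z, x), up to an additive constant."""
    log_prior = -0.5 * np.sum((z - prior_mean(x)) ** 2)
    return log_prior + np.log(likelihood(z, x)[y])


def infer_latent(x, y, steps=200, lr=0.05):
    """Posterior optimization: gradient ascent on log_joint over z only."""
    z = prior_mean(x).copy()
    onehot = np.eye(V)[y]
    for _ in range(steps):
        grad = -(z - prior_mean(x)) + A.T @ (onehot - likelihood(z, x))
        z = z + lr * grad
    return z


x = rng.normal(size=F)           # stand-in features for the context
y_obs = 3                        # an observed token used as evidence
p_y = marginal(x)                # next-token distribution with z integrated out
z_star = infer_latent(x, y_obs)  # plan adjusted to the evidence
print(p_y)
print(likelihood(prior_mean(x), x)[y_obs], likelihood(z_star, x)[y_obs])
```

Only the low-dimensional z is updated at inference time; the model parameters stay fixed. That is the sense in which adapting to an unfamiliar x is cheap in this kind of setup.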

How to cite this blog

@article{lizarraga2025rlisntenough,

title = {RL Isn't Enough},

author = {Andrew Lizarraga},

journal = {https://drewrl3v.github.io/},

year = {2025},

month = {April},

url = {https://drewrl3v.github.io/blogs/april2025.html}

}

References

- Lin, Chu-Cheng, Jaech, Aaron, Li, Xin, Gormley, Matthew R., & Eisner, Jason. (2021). Limitations of Autoregressive Models and Their Alternatives. Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. arXiv:2010.11939.

- Bertsekas, Dimitri P. (1995). Dynamic Programming and Optimal Control (2nd ed.). Athena Scientific.

- Kong, Deqian, Xu, Dehong, Zhao, Minglu, Pang, Bo, Xie, Jianwen, Lizarraga, Andrew, Huang, Yuhao, & Xie, Sirui. (2024). Latent Plan Transformer for Trajectory Abstraction: Planning as Latent Space Inference. In Advances in Neural Information Processing Systems, 37, 123379-123401. https://proceedings.neurips.cc.

- Kong, Deqian, Zhao, Minglu, Xu, Dehong, Pang, Bo, Wang, Shu, Honig, Edouardo, Si, Zhangzhang, Li, Chuan, Xie, Jianwen, Xie, Sirui, & Wu, Ying Nian. (2025). Scalable Language Models with Posterior Inference of Latent Thought Vectors. arXiv:2502.01567.

- Gabillon, Victor, Ghavamzadeh, Mohammad, & Scherrer, Bruno. (2013). Approximate Dynamic Programming Finally Performs Well in the Game of Tetris. In Advances in Neural Information Processing Systems. https://proceedings.neurips.cc.